Interview with Matt Scholz, Automotive Business Leader, Radiant Vision Systems

A focus on safety systems amid the shift toward semi-autonomous vehicles has made in-vehicle sensing one of the emerging priorities for automotive innovators. Driver distraction and drowsiness lead to accidents that may be prevented with the help of sensing systems, which rely on several visual and biometric indicators to keep eyes on the road and hands on the wheel. In particular, driver monitoring systems (DMS) and occupant monitoring systems (OMS) that use near-infrared light (wavelengths outside of human visual perception) provide unobtrusive, remote sensing of a person’s presence, pupil position and gaze, eyelid movement, and other indicators of driver alertness and occupant safety. These systems are rapidly being implemented into new vehicles, with near-IR light source output a critical parameter for system performance.

Automotive Interiors World speaks to Matt Scholz, automotive business leader at Radiant Vision Systems, to discuss in-vehicle sensing applications and solutions for near-IR light measurement to ensure effective driver monitoring and identification.

What solutions does Radiant Vision Systems offer the automotive interiors market?

Radiant offers a variety of solutions for the auto interiors market. Our portfolio includes automated test and measurement systems and software designed to provide scientific data to guide design and manufacture of illuminated components like displays, backlit components and light sources. These light measurement solutions can be used for evaluating your traditional instrument cluster, your center stack, backlit icons like an HVAC or volume control, or more advanced systems that we’re starting to see in the automotive industry like head-up displays – both conventional and augmented-reality formats – and of course, light-based sensing like near-infrared (NIR) driver monitoring systems (DMS) and occupant monitoring systems (OMS).

Light-based technology is being implemented inside the vehicle in a variety of different ways. For DMS and OMS, we’re seeing a variety of implementation areas in the interior: along the headliner of the car, in the vehicle steering wheel column, in the center stack column and throughout the cabin. From a test hardware and software perspective, Radiant has positioned itself to be a turnkey solution for analysis in all areas of the vehicle, and at all stages of manufacturing – whether that’s at the development, the quality assurance or the end-of-line component and integration stage.

Is in-vehicle sensing a new market for Radiant?

Yes, in-vehicle sensing is a new market for Radiant in the sense that these systems haven’t been applied in many vehicles on a global scale yet. If you look at the high-end vehicle market, the German automotive marketplace and in some Japanese vehicles, in-vehicle sensing systems have been present for several years – going back over 10 years in some vehicles. But these have been the rare exception until recently.

In-vehicle sensing technology is really hitting its stride now, and with several regulations emerging to enforce sensing systems, we’re seeing an uptick in the development of this technology across different tiers. That growth has allowed Radiant to focus its time and technology to address this new type of application, driving new hardware and new software developments to ensure that we meet the diverse testing requirements of Tier 1, Tier 2 and OEM suppliers within the space.

What has Radiant been working on in the in-vehicle sensing space?

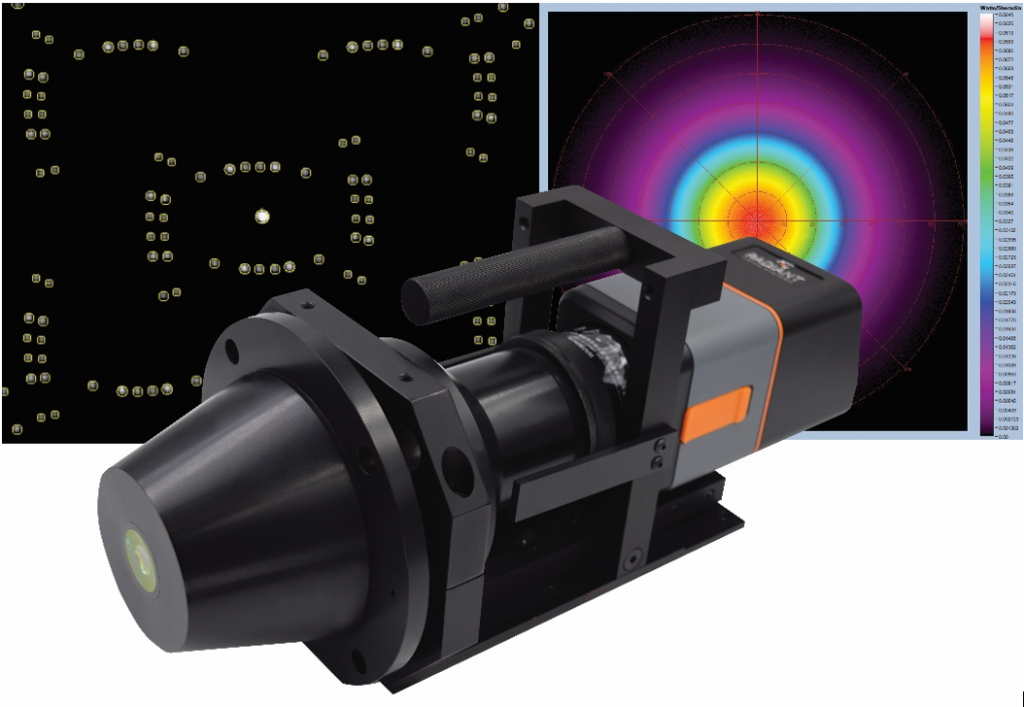

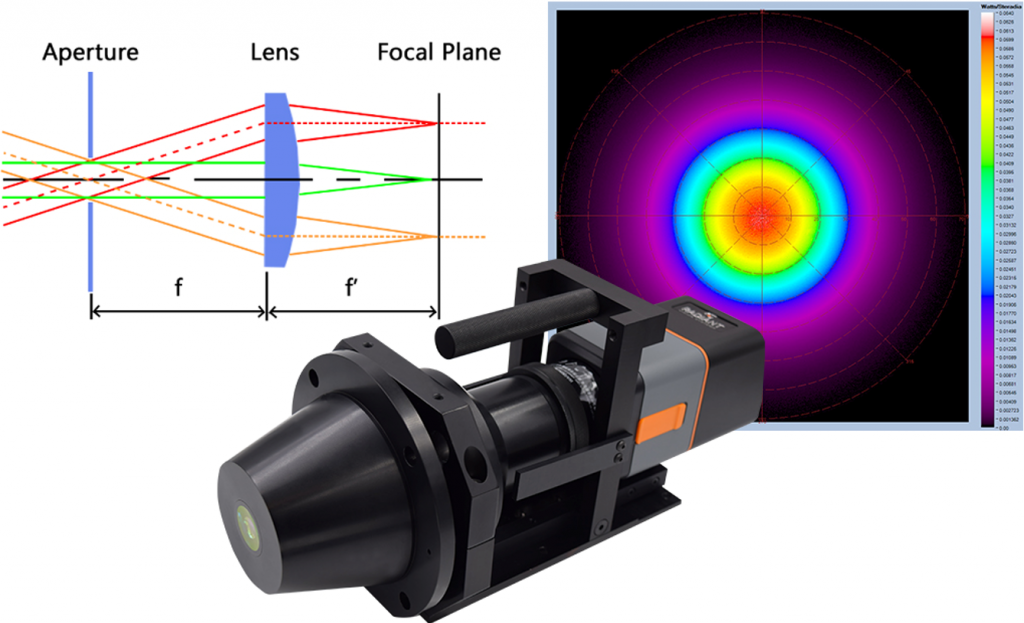

Radiant recently launched a unique camera/lens solution that is geared toward measuring near-infrared emitters, like LEDs and lasers used in near-IR sensing systems. We call it the NIR Intensity Lens, which is a conoscope lens applied in combination with a radiometric imager. This Fourier-optic solution attaches directly to our imaging system to measure the angular emission of a light source with optimal efficiency. What’s interesting about the lens is that we originally developed it for the consumer electronics market, where we are seeing near-IR as the primary wavelength for facial recognition – but of course, anything that happens in the consumer electronics market eventually bleeds over into automotive.

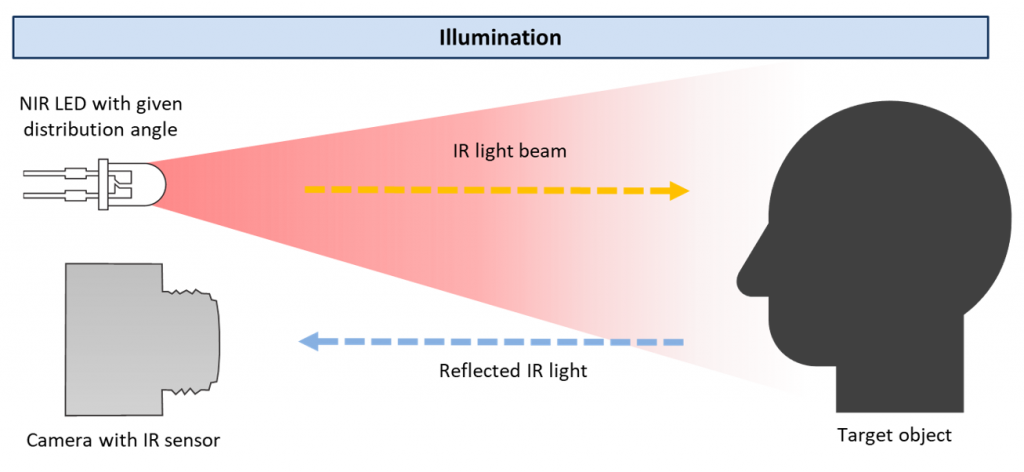

We’re now seeing a huge uptick in near-IR VCSEL (vertical-cavity surface-emitting laser) and LED sensing technologies inside the automotive cabin, which function by casting near-IR light into the cabin to enable IR-sensitive cameras to visualize automotive interiors and their occupants. With Radiant’s new lens, we’re able to quickly understand the distribution of these near-IR emitters – whether we’re looking at LED-based emitters or VCSEL emitters. The NIR Intensity Lens is used to quickly understand the performance of these light sources by mapping each source’s full distribution pattern in a single measurement image to measure its radiant intensity.

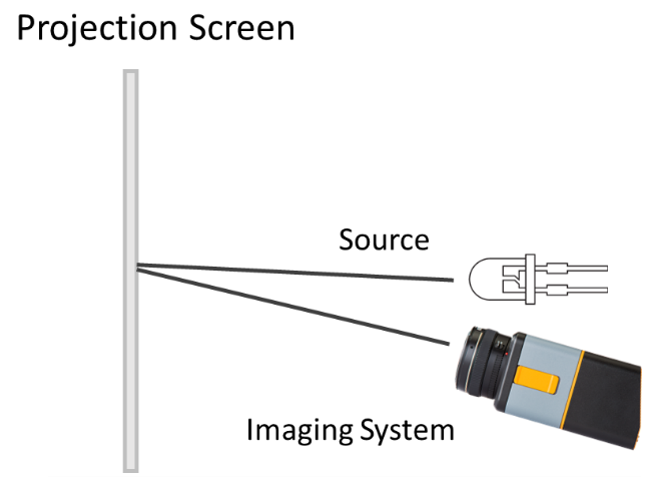

Radiant also offers IR filters for its portfolio of imaging systems (the Radiant Vision Systems ProMetric imaging system family) that can be integrated within each camera’s optical system to allow us to expand measurement capability outside of the visible spectrum and accurately measure near-IR light. Using one of these cameras with a standard Canon lens, we can look at the total distribution of a near-IR light source cast on a wall or screen to see how it maps out over a specific area – for example, the area intended to cover a human driver’s face in the vehicle.

Radiant’s measurement software is equally important for these test applications. We recently developed a new TT-NIRI Software module that provides a specific package of tests and test parameters for evaluating near-IR light. These include tests to evaluate angular distribution, time of flight (ToF) parameters, dot or structured light patterns from lasers, and other qualities to characterize the radiant intensity and geometry of near-IR light emissions. All of this information is really critical to understanding the technology that is being used in near-IR sensing applications.

Why are in-vehicle sensing and driver monitoring especially important today?

Several regulations are being implemented and there is generally an emphasis on safety awareness in vehicle systems development. Driver distraction is a primary concern right now – ensuring that drivers are keeping their eyes on the road and maintaining safe control of the vehicle. But of course, safety extends beyond the driver, to the vehicle occupants. Some OEMs have formed their own standards to ensure safety from the passenger perspective, for instance, how many people are in the car, whether a child is left behind in a car if a driver leaves, etc.

At the legislative level, there are regulations emerging from both the USA and European Union. These include the EU General Safety Regulation that specifies DMS in new European vehicles as of 2022, and the SAFE (Stay Aware for Everyone) Act of 2020 that is similarly aimed at installing DMS in future vehicles in the USA.

We have reached a point where there’s simply no reason not to have systems like these, which so dramatically improve safety and reduce accidents on the road. It’s like brakes and side mirrors. At some point, these features became regulated, and now every vehicle must have them. Now we are seeing this enforcement of rearview or backup cameras, and it will be the same for DMS. There is just too much evidence pointing to the risks of driver distraction. Looking at your phone, looking away, talking to the passengers, especially with the advent of autonomous driving – these risks are increasing and DMS is an obvious solution.

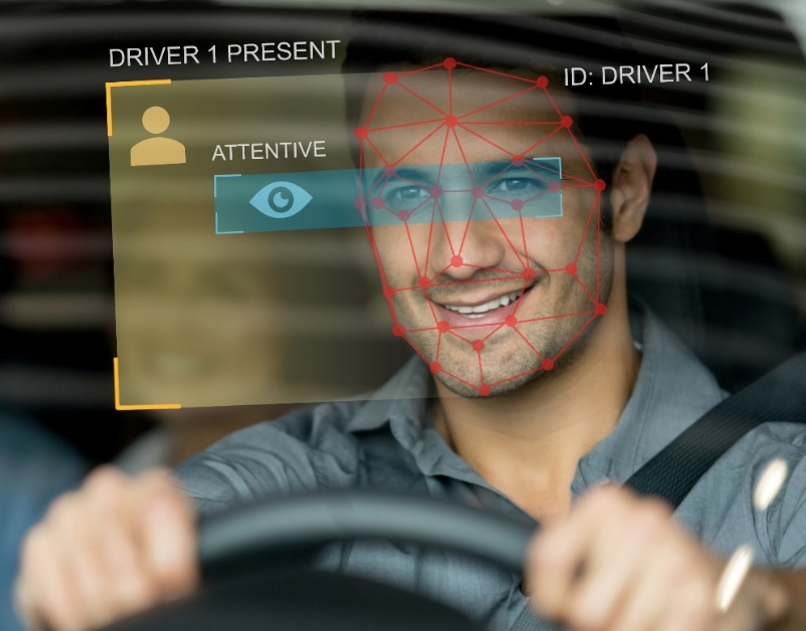

It is worth noting that, while the motivation behind in-vehicle sensing technology is primarily driver and occupant safety, of course, there are many luxury benefits that come along with it. You can imagine: You sit down in your car, the sensing technology scans your face, recognizes you, and says, “Hello, Matt Scholz, welcome to your car,” and begins to readjust the position of the driver seat and the steering wheel column, and maybe even applies the light settings that you prefer. Auto makers can leverage sensing technology to add these enhancements in the future, with the regulation of DMS and OMS moving us further in this direction.

Do you see a lot of growth in this area with the introduction of AVs?

Yes, although it remains hard to say how AVs are really going to shape the auto industry. For fully autonomous vehicles, we might envision that our cars will be more like our living rooms. You’re not going to be driving anymore. Everyone might be sitting in a circle, facing each other, or facing other areas of the vehicle. The car may have displays integrated in more places, for example. And, likely, there will be more sensing technologies integrated in the vehicle to continue to monitor the vehicle itself and its occupants, providing alerts in case someone needs to take over control of the steering wheel if the autonomous aspects of the vehicle malfunction, there’s an emergency on the road, or other issues.

This remains unclear while there are no AVs on the road today – at least, not fully autonomous vehicles like we envision in the future. Instead, we currently have semi-autonomous features like the Tesla Autopilot or Cadillac Super Cruise system. In the former case, steering wheel sensors are used, and in the latter, both near-IR and steering sensors are used to ensure that the driver is paying attention and that there are appropriate warnings to return a driver’s gaze to the road or regain control of the vehicle. How that evolves as we go to Level 5 autonomy is unclear – but for the short term, systems need to ensure drivers remain attentive and in control, especially while they’re operating in the semi-autonomous situations we have today.

Can you tell us more about how in-vehicle sensing systems work?

There are two primary systems I mentioned for in-vehicle sensing – driver monitoring systems, or DMS, and occupant monitoring systems, or OMS. Within these systems, various technologies are used to provide data for sensing. In the case of light-based systems, these are typically near-IR VCSELs, LEDs and IR cameras that monitor drivers or occupants within the vehicle.

But there are other types of in-vehicle sensors as well. For instance, thermal or infrared sensors, which monitor people’s heat signatures. There are also steering wheel sensors that can, in a basic sense, monitor pressure indicating driver control, or go beyond this with EKG functions to monitor heart rate. Other health and biometric monitoring systems include radar for detecting respiration and heart rate, for both DMS and OMS applications.

As a light-measurement provider, Radiant focuses on solutions for sensing technologies that use near-IR light sources in conjunction with IR cameras. Primary use cases of near-IR light in DMS and OMS applications include eye and pupil tracking, monitoring driver position, recognizing gestures and detecting occupant presence throughout the vehicle.

What do you think are the key benefits of near-infrared light for in-vehicle sensing?

As I mentioned, near-IR emitters have been used in vehicles for over a decade to provide remote and unobtrusive sensing. In the beginning, auto makers integrated these light sources in the steering wheel column – basically at the 12 o’clock signature line. They were typically using 890-nanometer (nm) light sources at this time, which unfortunately were obvious to the driver because the human eye can still see a very deep red glow emitting from 890nm sources. In direct view of the driver, especially at night, these light sources could be very distracting.

Near-IR light sources used today emit light at different wavelengths. As you move up the light spectrum (specifically, the spectrum of solar radiation), you get to a point around 930-950nm in the near-IR range where irradiance from ambient sunlight is absorbed, where light is not so damaging to the eye, and where light starts to fade from our physical visual perception. This means that if we use near-IR light sources with a signature of around 940nm, there is less interference from ambient sunlight, the light becomes basically invisible to the human eye, and you end up in a position where you can apply 940nm light at higher intensities without potential eye-safety risks.

Now as a manufacturer you can start to look at using more emitters or more intensity to flood the area that you’re interested in sensing, and that’s what generates a huge appeal for this wavelength of near-IR light. The lights can be used in both day and night without interference from sunlight and without being seen by the driver. There’s no glare, so there’s no driver distraction, which is why this wavelength has been so interesting to use both in consumer electronics and now the automotive industry.

Applications of 940nm sources for near-IR sensing in the consumer electronics market – that is, facial recognition in smartphones and laptops, or eye tracking in new virtual and augmented reality devices – show there is enough data to support using this same wavelength for in-vehicle sensing. With these studies already complete, it’s very easy for the auto industry to take 940nm LEDs and lasers on board because they don’t have to vet these systems against a full set of regulatory requirements ahead of time. They can take this technology that’s already well-developed and standardized and start implementing it to meet applications within the auto market.

Are there different applications for near-infrared lasers versus LEDs?

Looking at the beams and distribution patterns of these two light sources, it is clear why they might be used differently. A near-IR LED produces a very broad diffused distribution of light, whereas lasers produce a narrow beam, like a point source. There are different elements that you can apply to a laser source to diffuse that light and spread it like the distribution of an LED. However, LEDs are used in more areas of the vehicle interior at this time. Generally, the reasons for LEDs’ popularity are that most in-vehicle sensing applications can be accomplished sufficiently with LEDs, they produce a wide distribution of light that allows IR cameras to ‘see’ more of the interior, and these light sources can be implemented much more cost-effectively than lasers.

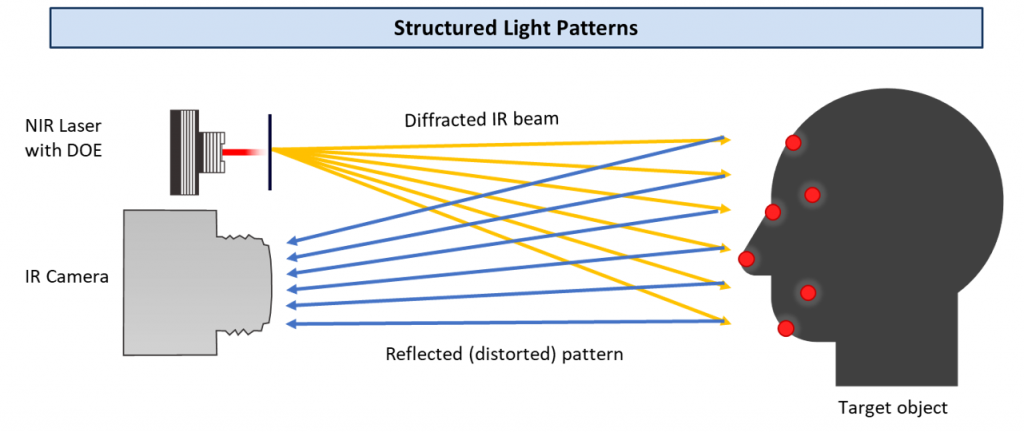

On the other hand, as we start to look at applications that rely on identifying 3D qualities of an object, we’re seeing that lasers with diffractive optical elements, or DOEs, offer greater precision because they can produce specific patterns with thousands of discrete points of information. What we are talking about here is a diffracted pattern of laser light, also called structured light or a dot pattern, that is cast and reflected off a 3D surface. Deformations in the reflected pattern are interpreted by the sensing system to create a map of the 3D surface with accurate depth and shapes. This is primarily beneficial if you are looking to identify the unique characteristics of an object like the curvature of a person’s face for facial recognition. When you are looking to generally monitor relative eye, head and body positions indicating user distraction (as in DMS), LED sources are still a great fit and carry a lower cost for implementation. However, both sources have been proven in the marketplace to service various needs.
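One common way a structured-light system can turn dot displacement into depth is triangulation between the emitter and the camera. The interview does not say which method any particular system uses, so the sketch below is only an illustration under that assumption. The function name, focal length, baseline and disparity values are all hypothetical.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Triangulated depth (metres) of one projected dot.

    Assumes a rectified emitter/camera pair: focal_length_px is the camera
    focal length in pixels, baseline_m the emitter-to-camera spacing, and
    disparity_px the dot's shift relative to its position for a surface
    at infinity. All of these are illustrative assumptions.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical shifts for three dots landing on a face-like surface.
for shift_px in (30.0, 33.0, 36.0):
    depth = depth_from_disparity(600.0, 0.05, shift_px)
    print(f"dot shifted {shift_px:.0f} px -> depth {depth:.3f} m")
```

Dots that shift more are closer to the sensor, which is how a pattern of thousands of dots can yield a depth map of a curved surface.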

What are some of the considerations for ensuring the performance of a near-infrared sensing system?

For in-vehicle sensing applications, you’re likely looking at measuring near-IR light sources to ensure sufficient radiant intensity to enable the various capabilities of the sensing system. You may want to understand the distribution of a single emitter, or multiple emitters, to cover a specific area from a specific distance. You may want to stack multiple emitters and understand how to safely accomplish a larger flood of light and take in more information. In all these applications, you want to verify the light source’s intensity and distribution so there is safe and sufficient near-IR light in the flooded area, for instance, the driver’s face. This ensures the IR cameras built into these cars can reliably detect light reflected off the driver or occupants, so the sensing system can provide critical information.

To characterize the output of a near-IR light source, you first need a technology that can operate within that wavelength, so you need to be looking at radiometric systems that detect light beyond the visible spectrum, typically above 750nm. Near-IR light sources have ranged from 890nm to now pushing 1,050nm. Again, what we’re seeing in the automotive interior is standardizing around 940nm. In all near-IR measurement applications, however, the important thing is that you need a piece of technology that can measure the wavelength of the source you are looking to characterize.

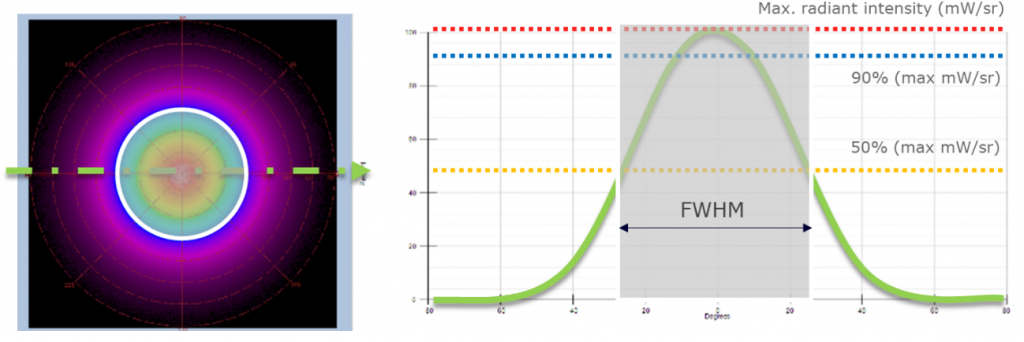

After choosing a system for your specific wavelength, you need to be able to characterize the light source in various ways to best understand how the source meets the application need. Typically, this includes evaluating the geometric distribution of the source and measuring the output (radiant intensity) across or at different points of this distribution. For instance, measuring the maximum intensity of the light source emission, the full-width-half-max (FWHM) intensity value of the distribution, or the uniformity of the intensity across the distribution (checking for intensity fall-offs or hot-spots). Being able to draw that information out from the measurement system and software is critical for understanding how the light source integrates with other components of the sensing system, for ultimate performance.
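As a rough illustration of the metrics named above, the following sketch computes peak intensity, FWHM and a simple uniformity ratio from one angular cut through a measured distribution. It is not Radiant's software. The function name, the min/max definition of uniformity, the ±30° region of interest and the cosine-shaped test profile are all assumptions made for the example.

```python
import math


def profile_metrics(angles_deg, intensities, roi_half_angle_deg=30.0):
    """Summarize one angular cut through an intensity distribution.

    angles_deg must be sorted ascending; intensities are radiant intensity
    samples (for example in W/sr) at those angles.
    """
    if len(angles_deg) != len(intensities) or len(angles_deg) < 2:
        raise ValueError("need matching angle and intensity lists")

    peak = max(intensities)
    peak_idx = intensities.index(peak)
    half = peak / 2.0

    def crossing(i_in, i_out):
        # Linear interpolation between a sample at or above half maximum
        # and its neighbour below half maximum.
        a0, a1 = angles_deg[i_in], angles_deg[i_out]
        v0, v1 = intensities[i_in], intensities[i_out]
        return a0 + (half - v0) * (a1 - a0) / (v1 - v0)

    left = right = None
    for i in range(peak_idx, 0, -1):  # walk left from the peak
        if intensities[i - 1] < half:
            left = crossing(i, i - 1)
            break
    for i in range(peak_idx, len(intensities) - 1):  # walk right
        if intensities[i + 1] < half:
            right = crossing(i, i + 1)
            break
    fwhm = right - left if left is not None and right is not None else None

    # Uniformity here is min/max inside the region of interest (an assumed
    # definition; other definitions are in use).
    roi = [v for a, v in zip(angles_deg, intensities)
           if abs(a) <= roi_half_angle_deg]
    uniformity = min(roi) / max(roi) if roi and max(roi) > 0 else None

    return {"peak": peak, "fwhm_deg": fwhm, "uniformity": uniformity}


# Hypothetical cosine-shaped emitter sampled every 10 degrees.
angles = list(range(-80, 81, 10))
profile = [math.cos(math.radians(a)) for a in angles]
print(profile_metrics(angles, profile))
```

A low uniformity value or an unexpectedly narrow FWHM would point to the intensity fall-offs or hot-spots mentioned above.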

Are there different measurement methods for addressing these performance requirements?

Yes, there are a variety of methods. As I mentioned, Radiant offers different lens options to measure the light source distribution – whether that’s our NIR Intensity Lens solution or a traditional Canon lens paired with a radiometric camera. In both cases, we rely on imaging using a radiometric camera to capture the entire distribution of a light source in a single measurement image for comprehensive analysis and optimal efficiency.

In the case of the NIR Intensity Lens, this solution is pointed directly at the near-IR emitter to capture its angular output. Light emissions out to ±70° are redirected by the lens’s Fourier optics to points on the camera’s internal sensor to create a polar plot of angular intensity data, visualized as a 2D image. Using this solution, you can measure across the light source’s entire angular distribution at once. You can look at the uniformity across the distribution, measure the radiant intensity at a specific solid angle, and then look at how that intensity drops off as you move to wider angles. For instance, a source could be measured directly, with the measurement system aligned to the light source perpendicularly.

Without rotating the measurement system or source, you could measure how the intensity of the distribution falls off as the emission angle becomes more extreme. You may want to map this fall-off to understand where the light source is producing the appropriate amount of intensity for the sensing system to detect – whether that’s at 10° distribution, 20° distribution, 30° distribution, and so on. Mapping the angular output in terms of scope and intensity is one key way of ensuring the performance of sensing systems that rely on IR cameras to receive light from a number of angles reflected from an object.
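The fall-off mapping described above can be sketched as follows, assuming the angular data has already been exported as intensity samples along one direction from the on-axis point outward. The function names, sample values and the 0.5 threshold are hypothetical and stand in for whatever minimum a given sensing system needs.

```python
def interpolate(angles_deg, intensities, angle):
    """Linearly interpolate intensity at an angle inside the sampled range."""
    for i in range(len(angles_deg) - 1):
        a0, a1 = angles_deg[i], angles_deg[i + 1]
        if a0 <= angle <= a1:
            v0, v1 = intensities[i], intensities[i + 1]
            return v0 + (v1 - v0) * (angle - a0) / (a1 - a0)
    raise ValueError("angle outside measured range")


def usable_half_angle(angles_deg, intensities, min_intensity):
    """Angle (from on-axis) at which intensity first drops below the minimum."""
    if intensities[0] < min_intensity:
        return 0.0
    for i in range(1, len(angles_deg)):
        if intensities[i] < min_intensity:
            a0, a1 = angles_deg[i - 1], angles_deg[i]
            v0, v1 = intensities[i - 1], intensities[i]
            return a0 + (min_intensity - v0) * (a1 - a0) / (v1 - v0)
    return float(angles_deg[-1])  # never dropped below the minimum


# Hypothetical samples from 0 to 70 degrees, normalized to the on-axis value.
angles = [0, 10, 20, 30, 40, 50, 60, 70]
relative = [1.0, 0.9, 0.8, 0.6, 0.4, 0.2, 0.1, 0.05]

for check in (10, 20, 30):
    print(f"{check} deg: {interpolate(angles, relative, check):.2f} of on-axis")
print(f"usable half angle: {usable_half_angle(angles, relative, 0.5):.1f} deg")
```

Because the whole distribution is captured in one image, the same calculation can be repeated along any direction of the polar plot without a new measurement.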

Of course, you may also care about what the intensity of a light source is at various distances. Are you getting enough light at 1ft, 3ft, 5ft, 6ft? If we think about the driver position, we’re generally talking about sensing an object 3ft away from where the sensing technology is going to be in the dashboard or steering column. An indirect measurement method could be used to give you information about the light source distribution, in terms of its shape and size at different distances or positions. Using a traditional Canon lens and radiometric camera, the measurement system can be pointed toward the distribution of a light source cast on a wall, allowing characterization of the source’s overall distribution area. Understanding how the intensity may be dropping off at greater distances or how the spread and spot size change – as opposed to looking at the angular output – may be another important performance parameter for the sensing system.
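For a first estimate of "enough light at a given distance", the inverse-square law relates on-axis radiant intensity (W/sr) to irradiance (W/m²) at the target, and simple geometry gives the spot size of a cone of light. The sketch below assumes a small source that behaves like a point emitter at these distances. The 0.5 W/sr intensity and 30° half angle are hypothetical values, not figures from the interview.

```python
import math

FT_TO_M = 0.3048  # exact definition of the international foot


def on_axis_irradiance(radiant_intensity_w_sr, distance_m):
    """Inverse-square estimate of on-axis irradiance in W/m^2."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return radiant_intensity_w_sr / distance_m ** 2


def spot_diameter(half_angle_deg, distance_m):
    """Diameter of the illuminated spot for a cone with this half angle."""
    return 2.0 * distance_m * math.tan(math.radians(half_angle_deg))


intensity = 0.5  # W/sr, hypothetical on-axis radiant intensity
for feet in (1, 3, 5, 6):
    d = feet * FT_TO_M
    print(f"{feet} ft: {on_axis_irradiance(intensity, d):.3f} W/m^2, "
          f"spot {spot_diameter(30.0, d):.2f} m")
```

Doubling the distance cuts the on-axis irradiance to a quarter while the spot diameter doubles, which is why distance and coverage area have to be considered together.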

Of course, having the proper measurement tool and analysis software is critical. A measurement system must accommodate the wavelength and distribution geometry of the source you are looking to measure. Then, additional considerations may be made for the sensing application, or for the stage in the manufacturing process at which you are incorporating measurement. Radiant brings a range of solutions to the table and makes performance testing easy to achieve at Tier 1, Tier 2, or OEM phases.

What other new technologies do you believe will affect the automotive interior in the future?

The big thing that we’re going to see, in my opinion, is a transition to additional camera monitor system (CMS) technology. This means bringing more display technology into the passenger and driver side doors, removing your traditional sideview mirrors, and instead using cameras that give you a much larger field of view of the outside of the vehicle to reduce blind zones. A lot of accidents happen because people aren’t paying attention to their blind zones when they’re driving the car. It’s proven that CMS removes much of that risk by bringing video feeds of the vehicle exterior directly inside the car.

The other big change comes with augmented reality. We see a shift in this space already for head-up display technologies. If we follow the current trend, one day you’re going to see head-up display integrations where the entire windshield basically acts as a sensor-based display, with virtual images dynamically responding to external elements. These elements could be pedestrians, vehicles, stores, restaurants, or any points of interest that you might want to highlight. That information is going to be fed into the vehicle, and with that a lot more information is going to be thrown in the field of view of drivers and occupants, giving sensing an even more important role to play.

Lastly, we’re going to see much larger displays inside the vehicle. That shift is already happening where new vehicles are beginning to feature 30in to 45in displays, curved displays and multi-panel displays. It’s clear we are observing an intersection of maximizing information and ways to interface with the vehicle, while at the same time trying to remove driver distraction. And that links back to why DMS and OMS will be so critical. Because we’re going to bring all these new technologies into the car, we need to make sure that the driver is still staying focused on the road. It’s all going to tie together in a fundamental way that will improve technology universally throughout the vehicle interior.

Matt Scholz, automotive business leader, Radiant Vision Systems.
